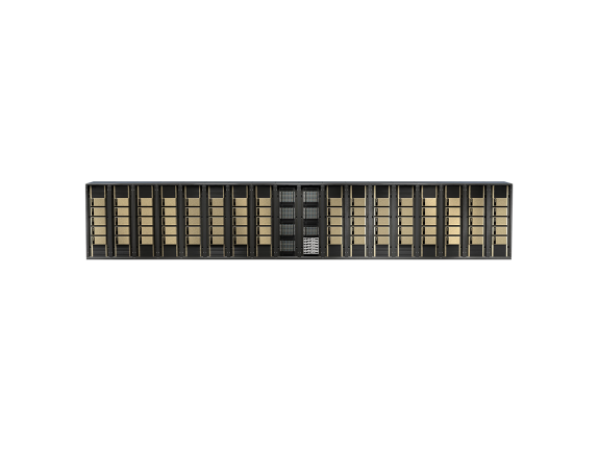

NVIDIA DGX SuperPOD GB200 AI Supercomputer System

- Manufacturer: NVIDIA

- Model No.: GB200

- Availability: Pre-order

Price: Call for pricing


Product information: NVIDIA DGX SuperPOD GB200 AI Supercomputer System

Enterprise Infrastructure for Mission-Critical AI

NVIDIA DGX SuperPOD™ with DGX GB200 systems is purpose-built for training and inference on trillion-parameter generative AI models. Each liquid-cooled rack holds 36 NVIDIA GB200 Grace Blackwell Superchips (36 NVIDIA Grace CPUs and 72 Blackwell GPUs in total), connected as a single unit with NVIDIA NVLink. Multiple racks are connected with NVIDIA Quantum InfiniBand to scale up to tens of thousands of GB200 Superchips.
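The rack composition described above can be turned into a quick sizing calculation. The following is a minimal sketch, not an NVIDIA tool: the function names `cluster_totals` and `racks_needed` are hypothetical, and the only inputs are the per-rack counts stated in the description (36 Superchips per rack, each with 1 Grace CPU and 2 Blackwell GPUs).

```python
import math

# Counts taken from the product description; everything else is illustrative.
SUPERCHIPS_PER_RACK = 36
CPUS_PER_SUPERCHIP = 1   # one Grace CPU per GB200 Superchip
GPUS_PER_SUPERCHIP = 2   # two Blackwell GPUs per GB200 Superchip


def cluster_totals(racks):
    """Return component counts for a given number of racks (hypothetical helper)."""
    superchips = racks * SUPERCHIPS_PER_RACK
    return {
        "racks": racks,
        "superchips": superchips,
        "grace_cpus": superchips * CPUS_PER_SUPERCHIP,
        "blackwell_gpus": superchips * GPUS_PER_SUPERCHIP,
    }


def racks_needed(superchips):
    """Return the smallest whole number of racks that holds the given Superchips."""
    return math.ceil(superchips / SUPERCHIPS_PER_RACK)


if __name__ == "__main__":
    print(cluster_totals(8))
    print(racks_needed(10_000))
```

Rounding up in `racks_needed` reflects the assumption that racks are deployed whole; a real deployment plan would also have to account for networking, power, and cooling, which this sketch ignores.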

→ Detailed introduction to NVIDIA DGX SuperPOD

→ NVIDIA DGX SuperPOD GB200 datasheet

SPECIFICATIONS

| Specification | Per rack | Per GB200 Superchip |
| --- | --- | --- |
| Configuration | 36 Grace CPUs : 72 Blackwell GPUs | 1 Grace CPU : 2 Blackwell GPUs |
| FP4 Tensor Core | 1,440 PFLOPS | 40 PFLOPS |
| FP8/FP6 Tensor Core | 720 PFLOPS | 20 PFLOPS |
| INT8 Tensor Core | 720 POPS | 20 POPS |
| FP16/BF16 Tensor Core | 360 PFLOPS | 10 PFLOPS |
| TF32 Tensor Core | 180 PFLOPS | 5 PFLOPS |
| FP64 Tensor Core | 3,240 TFLOPS | 90 TFLOPS |
| GPU Memory / Bandwidth | Up to 13.5 TB HBM3e / 576 TB/s | Up to 384 GB HBM3e / 16 TB/s |
| NVLink Bandwidth | 130 TB/s | 3.6 TB/s |
| CPU Core Count | 2,592 Arm® Neoverse V2 cores | 72 Arm Neoverse V2 cores |
| CPU Memory / Bandwidth | Up to 17 TB LPDDR5X / Up to 18.4 TB/s | Up to 480 GB LPDDR5X / Up to 512 GB/s |
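The two data columns in the table above are related by a simple factor: the larger configuration contains 36 of the smaller one. As a sanity check, the sketch below multiplies the per-Superchip figures by 36. The dictionary keys and the `scale_to_rack` helper are illustrative names, not part of any NVIDIA software; the numbers are copied from the table.

```python
# Per-Superchip figures copied from the specification table.
SUPERCHIPS_PER_RACK = 36

per_superchip = {
    "FP4 (PFLOPS)": 40,
    "FP8/FP6 (PFLOPS)": 20,
    "INT8 (POPS)": 20,
    "FP16/BF16 (PFLOPS)": 10,
    "TF32 (PFLOPS)": 5,
    "FP64 (TFLOPS)": 90,
    "GPU memory (GB)": 384,
    "GPU memory bandwidth (TB/s)": 16,
    "NVLink bandwidth (TB/s)": 3.6,
    "CPU cores": 72,
    "CPU memory (GB)": 480,
    "CPU memory bandwidth (GB/s)": 512,
}


def scale_to_rack(values, count=SUPERCHIPS_PER_RACK):
    """Multiply every per-Superchip figure by the number of Superchips."""
    return {name: value * count for name, value in values.items()}


rack = scale_to_rack(per_superchip)

if __name__ == "__main__":
    for name, value in rack.items():
        print(f"{name}: {value:,.1f}")
```

The compute, core-count, and bandwidth rows scale exactly (for example, 40 × 36 = 1,440 PFLOPS and 512 × 36 = 18,432 GB/s, listed as 18.4 TB/s), and 3.6 × 36 = 129.6 TB/s is listed rounded as 130 TB/s. The memory capacities come out to 13,824 GB and 17,280 GB; dividing by 1,024 gives 13.5 and about 16.9, which suggests the listed "13.5 TB" and "17 TB" use a 1,024-based conversion with rounding, though the source does not say so.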


Copyright © 2025 thegioimaychu.vn. All Rights Reserved.