
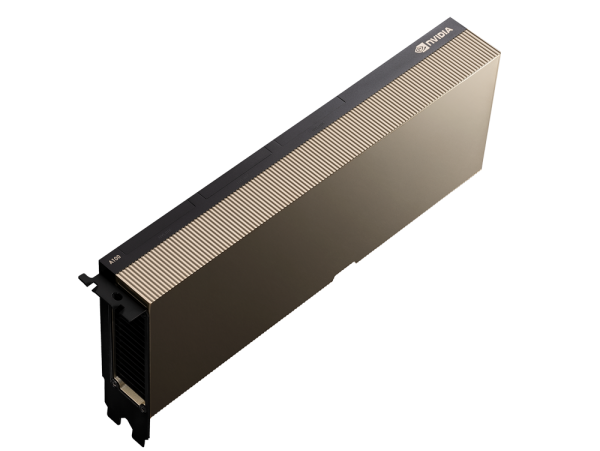

GPU NVIDIA H100 80GB PCIe 5.0 Passive Cooling

- Manufacturer: NVIDIA

- Product code / Part No.: TM9194 / H100

- Availability: Contact us to order

Price: Call

Our sales staff will contact you shortly.

Product information: GPU NVIDIA H100 80GB PCIe 5.0 Passive Cooling

Unprecedented performance, scalability, and security for every data center.

The NVIDIA H100 is an integral part of the NVIDIA data center platform. Built for AI, HPC, and data analytics, the platform accelerates over 3,000 applications and is available everywhere from the data center to the edge, delivering both dramatic performance gains and cost-saving opportunities.

Specifications

| Specification | H100 SXM | H100 PCIe | H100 NVL¹ |
|---|---|---|---|
| FP64 | 34 teraFLOPS | 26 teraFLOPS | 68 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |
| FP32 | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |
| TF32 Tensor Core | 989 teraFLOPS² | 756 teraFLOPS² | 1,979 teraFLOPS² |
| BFLOAT16 Tensor Core | 1,979 teraFLOPS² | 1,513 teraFLOPS² | 3,958 teraFLOPS² |
| FP16 Tensor Core | 1,979 teraFLOPS² | 1,513 teraFLOPS² | 3,958 teraFLOPS² |
| FP8 Tensor Core | 3,958 teraFLOPS² | 3,026 teraFLOPS² | 7,916 teraFLOPS² |
| INT8 Tensor Core | 3,958 TOPS² | 3,026 TOPS² | 7,916 TOPS² |
| GPU memory | 80GB | 80GB | 188GB |
| GPU memory bandwidth | 3.35TB/s | 2TB/s | 7.8TB/s³ |
| Decoders | 7 NVDEC | 7 NVDEC | 14 NVDEC |
| Max thermal design power (TDP) | Up to 700W (configurable) | 300-350W (configurable) | 2x 350-400W |
| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 10GB each | Up to 14 MIGs @ 12GB each |
| Form factor | SXM | PCIe | 2x PCIe |
| Interconnect | NVLink: 900GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s | NVLink: 600GB/s |
| Server options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX H100 with 8 GPUs | Partner and | Partner and |
| NVIDIA AI Enterprise | Add-on | Included | Add-on |

¹ H100 NVL figures cover a pair of cards.

² With sparsity.

³ Aggregate across both cards.
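The Tensor Core rates marked with footnote 2 are sparse figures, and NVIDIA's 2:4 structured sparsity doubles peak throughput, so dense rates are half the listed values. The minimal Python sketch below is a rough guide only. It assumes that halving rule and assumes that the H100 NVL column describes two cards. From the table it derives dense FP16 throughput, per-card throughput, and a rough roofline ridge point (peak FLOPS divided by memory bandwidth). `SPECS`, `dense_tflops`, `ridge_point`, and `summarize` are hypothetical names chosen for illustration. All values are theoretical peaks, not measured performance.

```python
"""Back-of-the-envelope figures derived from the H100 specification table.

Assumptions (not stated on the product page):
- Tensor Core rates are quoted with 2:4 structured sparsity, so dense = listed / 2.
- The H100 NVL column describes a pair of cards.
"""

# Listed (sparse) FP16 Tensor Core rate in teraFLOPS and memory bandwidth in TB/s,
# copied from the specification table.
SPECS = {
    "H100 SXM": {"fp16_sparse_tflops": 1979.0, "bandwidth_tbs": 3.35, "cards": 1},
    "H100 PCIe": {"fp16_sparse_tflops": 1513.0, "bandwidth_tbs": 2.0, "cards": 1},
    "H100 NVL": {"fp16_sparse_tflops": 3958.0, "bandwidth_tbs": 7.8, "cards": 2},
}


def dense_tflops(sparse_tflops: float) -> float:
    """Structured sparsity doubles the peak rate, so halve it for dense math."""
    return sparse_tflops / 2


def ridge_point(tflops: float, bandwidth_tbs: float) -> float:
    """FLOP per byte at which a kernel stops being memory-bandwidth-bound.

    teraFLOPS / (TB/s) = FLOP / byte, because the 10^12 factors cancel.
    """
    return tflops / bandwidth_tbs


def summarize(name: str) -> dict:
    """Return dense FP16 throughput (total and per card) and the ridge point."""
    spec = SPECS[name]
    dense = dense_tflops(spec["fp16_sparse_tflops"])
    return {
        "dense_fp16_tflops": dense,
        "dense_fp16_tflops_per_card": dense / spec["cards"],
        "ridge_flop_per_byte": ridge_point(dense, spec["bandwidth_tbs"]),
    }


if __name__ == "__main__":
    for name in SPECS:
        s = summarize(name)
        print(
            f"{name}: {s['dense_fp16_tflops']:.1f} dense FP16 TFLOPS "
            f"({s['dense_fp16_tflops_per_card']:.1f} per card), "
            f"ridge point ~{s['ridge_flop_per_byte']:.0f} FLOP/byte"
        )
```

For example, the H100 PCIe works out to about 756.5 dense FP16 teraFLOPS. Its ridge point is near 378 FLOP/byte. Under these assumptions, kernels with lower arithmetic intensity are more likely to be limited by the 2TB/s memory bandwidth than by compute.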

- NVIDIA H100 GPU Datasheet


CONSULTATION - PURCHASING - WARRANTY

Head office, Ho Chi Minh City

+84 2854 333 338 - Fax: +84 28 54 319 903

NTC branch, Da Nang

+84 236 3572 189 - Fax: +84 236 3572 223

NTC branch, Hanoi

+84 2437 737 715 - Fax: +84 2437 737 716

Copyright © 2017 thegioimaychu.vn. All Rights Reserved.